Can Large Language Models Solve Optimisation Problems?

A new study explores how GPT-3.5 can generate and debug optimisation models in MiniZinc, bringing us closer to automated problem-solving.

Everyday life is full of optimisation problems, even if we don’t notice them. Airlines must design flight schedules that minimise costs while keeping passengers moving. Hospitals juggle beds, staff, and equipment to deliver timely care. Supermarkets decide how to transport goods efficiently from warehouses to stores.

Behind the scenes, all of these challenges rely on mathematical optimisation models—formal descriptions of a problem that allow computers to search for the best possible solution under a set of constraints. Building such models, however, requires highly specialised knowledge. Learning a modelling language like MiniZinc can take months, and even seasoned experts spend hours debugging errors.

But what if an AI could do that for us?

The Idea: Let the AI Do the Heavy Lifting

A recent study tested whether large language models (LLMs), such as GPT-3.5, can automatically generate optimisation models from simple natural-language instructions. Instead of manually coding variables, constraints, and objectives, a user can describe the problem in plain English—for example, “a model with 10 discrete variables without constraints”.
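
To give a sense of what such a prompt asks for, here is a minimal Python sketch that builds the kind of MiniZinc text one might expect for the example above. The helper name `unconstrained_model`, the variable names `x1` to `x10`, and the `0..9` domain are assumptions made for illustration. The prompt itself does not fix a domain, and the models GPT-3.5 produced in the study may have looked different.

```python
def unconstrained_model(n_vars: int = 10, lo: int = 0, hi: int = 9) -> str:
    """Return MiniZinc source with n_vars integer decision variables and no constraints."""
    # One bounded integer decision variable per line, e.g. "var 0..9: x1;"
    decls = [f"var {lo}..{hi}: x{i};" for i in range(1, n_vars + 1)]
    # With no constraints and no objective, the model is a plain satisfaction problem.
    return "\n".join(decls + ["solve satisfy;"]) + "\n"


if __name__ == "__main__":
    # Print a small three-variable instance to show the shape of the output.
    print(unconstrained_model(n_vars=3))
```

Even this trivial case shows what the LLM has to get right: declaration syntax, variable domains, and a `solve` item.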

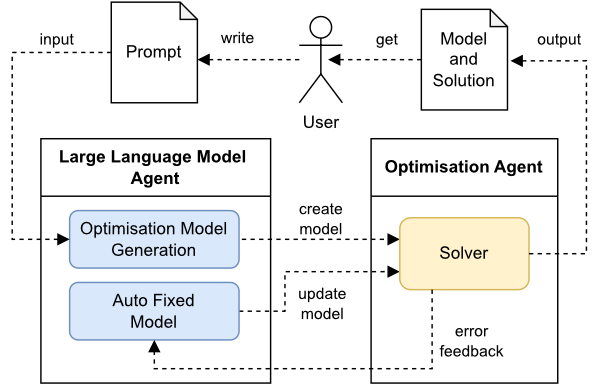

The system then works in two stages:

- Model generation – GPT-3.5 writes a draft MiniZinc program based on the user’s description.

- Validation and repair – The program is tested by an optimisation solver. If it fails, the error message is sent back to the AI, which tries to correct the code automatically. This loop continues until the solver produces a valid result or the process stalls.

This creates a semi-automatic pipeline where the AI acts as both programmer and debugger, translating high-level instructions into runnable optimisation models.
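
The two stages above can be sketched as a short loop. This is a rough Python outline under stated assumptions, not the study's implementation: `ask_llm` and `run_solver` are hypothetical callables supplied by the caller, the prompt wording is invented, and "the process stalls" is interpreted here as either running out of rounds or receiving a draft that has already failed.

```python
from typing import Callable, Optional, Tuple

# Hypothetical interfaces (assumptions, not the study's code):
#   ask_llm(prompt)    -> MiniZinc source text
#   run_solver(source) -> (ok, message); message carries the solver's error output on failure
AskLLM = Callable[[str], str]
RunSolver = Callable[[str], Tuple[bool, str]]


def generate_and_repair(description: str, ask_llm: AskLLM, run_solver: RunSolver,
                        max_rounds: int = 5) -> Optional[str]:
    """Return the first model the solver accepts, or None if the loop stalls."""
    # Stage 1: draft a model from the plain-English description.
    source = ask_llm(f"Write a MiniZinc model for: {description}")
    seen = set()
    # max_rounds bounds the number of solver runs.
    for _ in range(max_rounds):
        ok, message = run_solver(source)
        if ok:
            return source
        # A draft that already failed once would fail again: treat it as a stall.
        if source in seen:
            return None
        seen.add(source)
        # Stage 2: send the error message back and ask for a corrected model.
        source = ask_llm(
            f"This MiniZinc model failed:\n{source}\nError:\n{message}\n"
            "Return a corrected model."
        )
    return None
```

In practice, `run_solver` could wrap a call to the `minizinc` command-line tool and `ask_llm` a chat-completion request. Both are left as parameters so the loop can be tried out with simple stand-in functions.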

Putting GPT-3.5 to the Test

To evaluate the idea, the study tested ten optimisation scenarios. The first five involved individual discrete variables, while the last five used arrays of variables with additional constraints.

For each case, the AI received only a short text prompt describing the model requirements. The generated MiniZinc code was then compiled and tested. If the model ran successfully and matched the requested specification, it was marked as correct.

The results were mixed but encouraging:

- Five models were valid and correct on the first or second attempt.

- Three models compiled and ran but failed to fully meet the requested conditions.

- Two models contained errors related to missing libraries, which made them unusable despite looking reasonable at first glance.

In one notable example, the AI generated a model with an all_different constraint, which is commonly used in scheduling and assignment problems, but left out the include statement that loads the constraint’s definition from the MiniZinc library. The program looked fine to a casual reader, but the solver could not run it. This highlights both the power and the fragility of using LLMs for technical tasks.
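
To make this failure concrete: in MiniZinc, `all_different` is a global constraint, and a model that uses it needs a line such as `include "globals.mzn";`. The Python sketch below shows a simple static check that adds the line when it is missing. It is an illustrative alternative to the LLM-driven repair described in the study, not the study's method. The function name, the short list of constraint names, and the sample model are all assumptions.

```python
import re

# Global constraints this sketch looks for; a deliberately short, illustrative list.
GLOBAL_NAMES = ("all_different", "alldifferent")

# Include lines that would already make all_different available.
INCLUDE_RE = re.compile(r'include\s+"(globals|all_different|alldifferent)\.mzn"\s*;')


def add_missing_include(source: str) -> str:
    """Prepend include "globals.mzn"; if a listed global is used without a matching include."""
    uses_global = any(re.search(rf"\b{name}\s*\(", source) for name in GLOBAL_NAMES)
    if uses_global and INCLUDE_RE.search(source) is None:
        return 'include "globals.mzn";\n' + source
    return source


# A plausible-looking model with the same flaw: the constraint is used but never included.
broken = (
    "array[1..3] of var 1..3: x;\n"
    "constraint all_different(x);\n"
    "solve satisfy;\n"
)
fixed = add_missing_include(broken)

if __name__ == "__main__":
    print(fixed)
```

A check like this only catches one narrow class of error. A model that compiles but does not match the requested specification would still need other validation.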

Why It Matters

The study shows that LLMs can already perform parts of the modelling process that were once the exclusive domain of experts. By converting natural language into structured optimisation code, these systems could lower the barrier to entry for non-specialists. A doctor, engineer, or farmer could, in principle, describe their problem to an AI and receive a ready-to-run model without ever learning a modelling language.

This has the potential to accelerate innovation in industries such as healthcare, energy, transportation, and logistics—fields where optimisation is critical but technical expertise is scarce.

At the same time, the limitations are clear. The AI often produces plausible-looking but subtly flawed models, and its ability to correct itself depends heavily on the quality of error messages. Reliability remains a major challenge before such systems can be trusted in real-world applications.

Looking Ahead

Future research could explore more advanced language models, test alternative optimisation languages, and refine the feedback loop between solvers and AI. Improvements in error handling, or hybrid approaches where humans and machines collaborate, might close the gap between promising prototypes and practical tools.

For now, the findings serve as proof of concept: LLMs can indeed generate useful optimisation models, even if they are not yet consistent enough to replace human expertise. Instead, they may become powerful assistants—speeding up routine tasks, offering quick prototypes, and helping users navigate the complex world of mathematical optimisation.

References

Research by: Boris Leonardo

Published: May 9, 2023 | Platform: alphaXiv preprint

DOI: 10.48550/arxiv.2305.05811

Key Findings:

- GPT-3.5 generated valid and correct MiniZinc optimisation models in 5 of 10 test cases (50%)

- Three additional models (30%) compiled but didn’t fully meet specifications

- Two models (20%) failed due to missing library imports, highlighting the importance of technical accuracy

- Demonstrated a two-stage approach: automatic model generation followed by iterative debugging

- Results suggest LLMs can serve as powerful assistants for optimisation modelling, though human oversight remains essential

Why this matters: This work is one of the first systematic evaluations of large language models for automatic optimisation model generation. It opens new possibilities for democratising mathematical optimisation tools and making them accessible to non-experts across industries.